Some e-commerce sites let customers write reviews of their products, which other customers can then browse when deciding whether to buy. I know I’ve read reviews written by my fellow customers to help me figure out if a product would be true to size, last a long time, or contain an ingredient I’m concerned about.

What if a business could predict which reviews its customers would find helpful? Maybe it could put those reviews first on the page so that readers could get the best information sooner. Maybe the business could note which topics come up in those helpful reviews and revise its product descriptions to contain more of that sort of information. Maybe the business could even identify “super reviewers,” users who are especially good at writing helpful reviews, and offer them incentives to review more products.

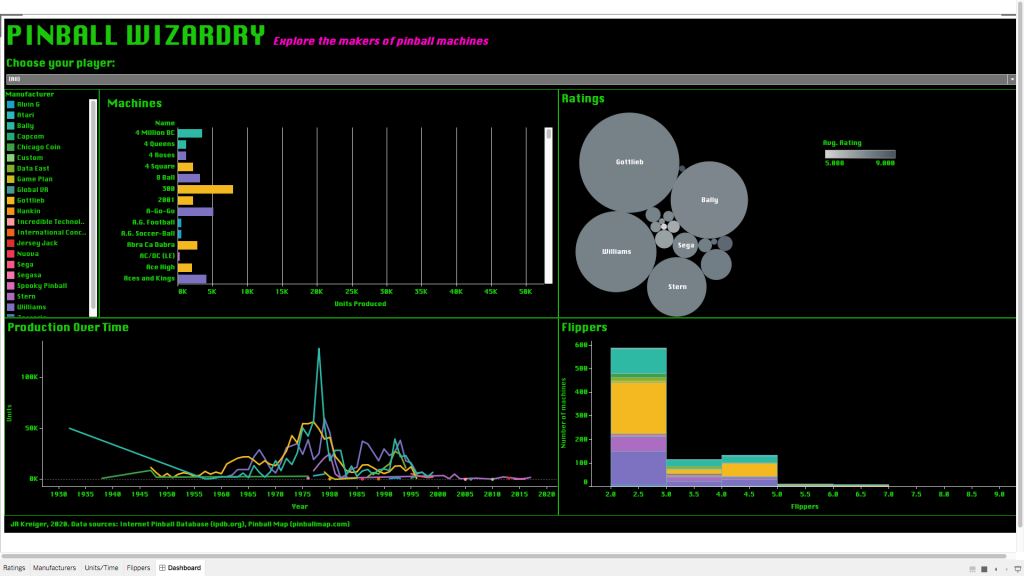

Using a large collection of product reviews from Amazon, I trained a range of machine learning models to try to identify which reviews readers rated as “helpful.” I tried random forests, logistic regression, a support vector machine, GRU networks, and LSTM networks, along with a variety of natural language processing (NLP) techniques for preprocessing my data. As it turns out, predicting helpful reviews is pretty hard, but not impossible! To go straight to the code, check out my GitHub repo. To learn more about how I did it, read on.
